sebastiandaschner blog

Simple, Rolling-Update Production Setup With Docker & Traefik

Tuesday, December 20, 2022

For hosting our containerized web applications, we have tons of possibilities, from proprietary cloud-based solutions to container orchestration powered by Kubernetes, and many others. Ideally, the chosen technology already takes care of all the heavy lifting with regard to load balancing, SSL certificates, rolling updates, and so on. However, for very simple, pragmatic, and especially affordable hosting that should always be running, it still makes a lot of sense to use our own virtual or physical server and run our apps directly in the OS or in containers.

For my own few applications, I have mostly been running them directly on a server using Docker containers. NGINX generally does quite a good job as a reverse proxy, but it can be a bit painful to set up the container networking the way you want it, especially with the ability to restart and update single applications without downtime.

Which is why I recently switched to using Traefik as a simple, container-based load balancer for my production setup. In this post, I want to show how to run a Java-based application in Docker and make it accessible via the Traefik load balancer, including SSL certificates, a custom domain, and of course rolling updates so that we don't disturb our users.

Traefik

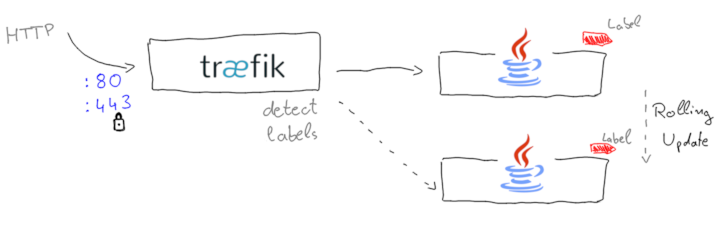

The Traefik Proxy nicely integrates with our Docker host and can auto-detect other containers based on their container labels.

This approach decouples the proxy networking configuration and is similar to how a Kubernetes Service works.

The Traefik load balancer sets up so-called entrypoints that define the outward-facing interface, for example HTTP via :80 or HTTPS via :443.

The routers and services can be defined by the backend containers in their labels, and are discovered automatically.
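To make the label convention a bit more tangible, here is a minimal sketch of a helper that prints the two labels a backend container needs in this setup. The function name traefik_labels and its arguments are hypothetical and only for illustration; the label keys themselves follow the pattern used in this post.

```shell
# Hypothetical helper (not part of Traefik): prints the labels that tell
# Traefik's Docker provider how to route to one backend container.
#   $1 = router name, $2 = host name to match, $3 = container port
traefik_labels() {
  local name=$1 host=$2 port=$3
  # Router: which requests should be forwarded to this container
  echo "traefik.http.routers.${name}.rule=Host(\`${host}\`)"
  # Service: which container port the load balancer should talk to
  echo "traefik.http.services.${name}-service.loadbalancer.server.port=${port}"
}

# Possible usage (assumes the demo image from this post):
#   docker run -d \
#     -l "$(traefik_labels coffee localhost 8080 | sed -n 1p)" \
#     -l "$(traefik_labels coffee localhost 8080 | sed -n 2p)" \
#     sdaschner/quarkus-coffee:traefik-demo
```

The router name (coffee) and the service name (coffee-service) are free to choose; since the container defines only one service, the router is attached to it without further configuration.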

The basic setup is pretty straightforward. While the Traefik docs use Docker Compose, I’ll go a simpler route and just start two Docker containers:

docker run -d \
  --name traefik \
  -p 80:80 \
  -p 8080:8080 \
  -v /var/run/docker.sock:/var/run/docker.sock \
  traefik:v2.9 \
  --log.level=DEBUG \
  --api.insecure=true \
  --entrypoints.web.address=:80 \
  --providers.docker=true

This starts up the Traefik container with the default HTTP address and the additional dashboard, accessible under :8080.

In order to proxy to our application, we’ll start up this one as well. I’m using my Quarkus playground coffee example application:

docker run -d \
  -l traefik.http.routers.coffee.rule='Host(`localhost`)' \
  -l traefik.http.services.coffee-service.loadbalancer.server.port='8080' \
  sdaschner/quarkus-coffee:traefik-demo

That’s already sufficient to run our example.

Now you can access http://localhost/ and see the result:

curl localhost -i

HTTP/1.1 200 OK

Content-Length: 7

Content-Type: application/octet-stream

Date: Fri, 04 Nov 2022 16:17:29 GMT

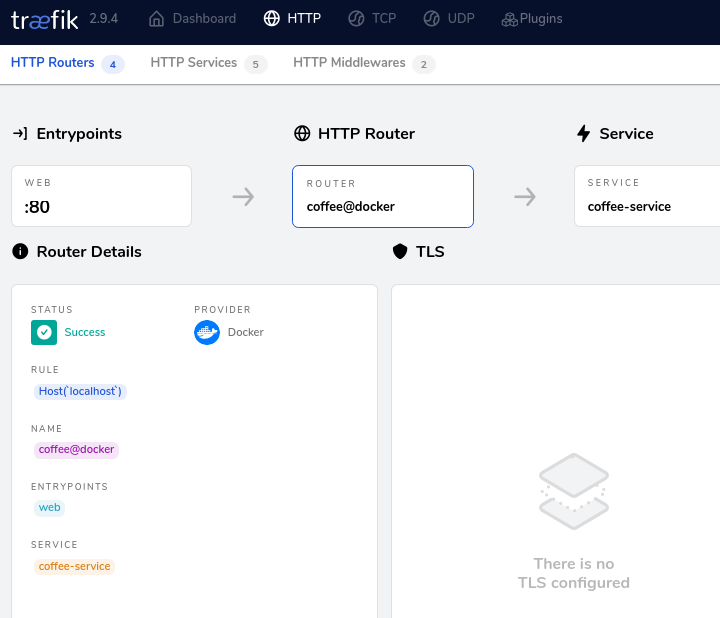

CoffeeIf you navigate to localhost:8080, you will see the Traefik Proxy dashboard that now shows the coffee-server that has been detected, along with the other default routings.

This dashboard can be helpful to verify your configuration.
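The same check can also be done on the command line. The following is a small sketch, assuming the API is exposed on :8080 via --api.insecure=true as above and that each router object in the JSON returned by /api/http/routers carries a "name" field. The helper name router_names is made up for this example.

```shell
# Hypothetical helper: reads the JSON of Traefik's /api/http/routers
# endpoint from stdin and prints one router name per line.
# Deliberately avoids jq so it only needs grep and sed.
router_names() {
  grep -o '"name":[[:space:]]*"[^"]*"' | sed 's/.*"\([^"]*\)"$/\1/'
}

# Possible usage against the demo setup:
#   curl -s http://localhost:8080/api/http/routers | router_names
```

With the coffee container running, I would expect the list to contain an entry for the coffee router next to Traefik's internal ones.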

Production Setup

Now that wasn’t bad for a “Hello world” setup, but on a real server you’d need proper startup scripts, SSL certs, an actual domain, and some way to perform updates without disrupting the users too much.

Even though our Quarkus application starts up really quickly, some way similar to what a Kubernetes Deployment offers would be nice.

So, let’s add a proper domain and certificates, some timeouts, and remove the debug logging and dashboard access.

You can stop and remove the previous two containers again; we start our Traefik container now a bit differently:

docker run -d \
  --name traefik \
  -p 80:80 \
  -p 443:443 \
  -v /var/run/docker.sock:/var/run/docker.sock \
  -v $(pwd)/configuration/:/configuration/ \
  traefik:v2.9 \
  --entrypoints.web.address=:80 \
  --entrypoints.web.http.redirections.entrypoint.scheme=https \
  --entrypoints.web.http.redirections.entrypoint.to=web-secure \
  --entrypoints.web-secure.address=:443 \
  --providers.docker=true \
  --providers.file.directory=/configuration/ \
  --providers.file.watch=true \
  --serverstransport.forwardingtimeouts.dialtimeout=2

We’ve added port :443, a file configuration, auto redirection from HTTP to HTTPS, and a shorter timeout to our backend (2 seconds instead of the default 30).

The configuration/ directory will contain the following YAML file, and our certificates.

ls configuration/
coffee.example.com.crt coffee.example.com.key traefik.yml

In case you need a test self-signed wildcard certificate, you can create one as follows:

openssl req -x509 -out coffee.example.com.crt -keyout coffee.example.com.key \
  -newkey rsa:2048 -nodes -sha256 \
  -subj '/CN=example.com' -extensions EXT -config <( \
    printf "[dn]\nCN=example.com\n[req]\ndistinguished_name = dn\n[EXT]\nsubjectAltName=DNS:*.example.com\nkeyUsage=digitalSignature\nextendedKeyUsage=serverAuth")

The traefik.yml file looks as follows:

tls:
  certificates:
    - certFile: /configuration/coffee.example.com.crt
      keyFile: /configuration/coffee.example.com.key

This file contains so-called dynamic configuration that can be updated at runtime, which comes in very handy when we’re rolling our certs. It will be watched while Traefik is running.

For the application, we make sure Traefik knows about our health checks to make startups a bit smoother for the reverse proxy, as well as the proper domain.

The Quarkus backend is now started as follows:

docker run -d \
  --name coffee-$(date +%s) \
  -l app='coffee' \
  -l traefik.http.routers.coffee.rule='Host(`coffee.example.com`)' \
  -l traefik.http.routers.coffee.tls='true' \
  -l traefik.http.services.coffee-service.loadbalancer.server.port='8080' \
  -l traefik.http.services.coffee-service.loadbalancer.healthcheck.path='/q/health' \
  -l traefik.http.services.coffee-service.loadbalancer.healthcheck.interval='2s' \
  -l traefik.http.services.coffee-service.loadbalancer.healthcheck.timeout='2s' \
  sdaschner/quarkus-coffee:traefik-demo

We also name the container following a schema like coffee-1667631887 and give it another label (app=coffee) so that we can detect it.

This is helpful in case we want to build some scripts around that can perform a rolling update (see below).
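As a small sketch of how that label can be used, the following helper lists all running containers of an application except one that should be kept. The function name old_containers is hypothetical; docker ps with -q and a label filter is the standard Docker CLI way to select by label.

```shell
# Hypothetical helper: prints the short IDs of all running containers
# labelled app=<app>, except the one whose short ID is passed as <keep>.
#   $1 = value of the app label, $2 = 12-character ID of the container to keep
old_containers() {
  local app=$1 keep=$2
  # docker ps -q prints 12-character IDs, one per line.
  # "|| true" keeps the exit code at 0 when grep filters out everything.
  docker ps -q --filter "label=app=${app}" | grep -v "^${keep}" || true
}

# Possible usage:
#   old_containers coffee 797c368af374
```

Selecting by label rather than by name means the timestamp in the container name never has to be parsed.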

When you start up these two updated containers, you can test the access as follows:

curl https://coffee.example.com/ \
  --resolve coffee.example.com:443:192.168.X.X \
  --insecure

With --resolve, curl maps the coffee.example.com domain locally to your IP address.

Under Linux you could also edit your /etc/hosts.

Simple Rolling Update

Even for very basic setups, it would be nice if we could deploy a new backend version or rotate the certificates without a long downtime. What we can do in our setup is perform a rolling update: we start a new container with the new image, let Traefik include it in its load balancing so that both containers are served for a short period of time, and then stop the old container.

The following is a bit more experimental, or should we say pragmatic, but by trying things out you can quickly come up with an approach that works for your setup.

What we want to do is the following:

- The traefik and coffee-123 containers are running
- We start a new container coffee-234 with the updated image
- We wait for coffee-234 to be started up and healthy
- Optional: we disable the health check of coffee-123 (if supported)
- We stop container coffee-123

The optional step of disabling the old container’s health check can smooth out the switch, as well as using some other mechanisms such as proxy retries, which are in fact supported by Traefik. I encourage you to test the update and traffic switching under some simulated load of many HTTP requests to see how the applications will actually behave.
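For a first impression of how the switch behaves, a simple request loop can already tell a lot. This is only a sketch under a few assumptions: the function name count_failures is made up, the requests are sent one after another rather than concurrently, and anything other than HTTP 200 is counted as a failure.

```shell
# Hypothetical helper: sends <count> sequential requests to <url> and
# prints how many of them did not come back with HTTP status 200.
#   $1 = URL, $2 = number of requests, remaining args are passed to curl
count_failures() {
  local url=$1 count=$2 failures=0 i=0 code
  shift 2
  while [ "$i" -lt "$count" ]; do
    # -w prints only the status code; failed connections yield 000
    code=$(curl -s -o /dev/null -w '%{http_code}' "$@" "$url" || true)
    [ "$code" = "200" ] || failures=$((failures + 1))
    i=$((i + 1))
  done
  echo "$failures"
}

# Possible usage while an update is running in another terminal:
#   count_failures https://coffee.example.com/ 500 --insecure \
#     --resolve coffee.example.com:443:<IP>
```

A result of 0 over a few update runs is a good sign, although a proper load testing tool with concurrent requests will give a more realistic picture.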

For now, we can write up some pragmatic shell scripts similar to the following.

ls
configuration rolling-update.sh run-coffee.sh run-traefik.sh server.env

The run-* scripts now contain the docker run definitions, server.env is an environment file in which we specify our Docker images, and the rolling-update.sh script looks as follows:

#!/bin/bash
set -euo pipefail
cd "${0%/*}"

echo "Starting new coffee container and waiting for startup ..."
# run-coffee.sh prints the full ID of the new container (docker run -d)
container=$(./run-coffee.sh)
# short ID, in the same format as printed by docker ps -q
newId=${container::12}
timeout=20

# wait for coffee startup
while [[ "$(docker exec "$container" curl -s -o /dev/null -w '%{http_code}' http://localhost:8080/q/health)" != "200" ]]; do
  sleep 1
  timeout=$((timeout-1))
  if [[ $timeout == 0 ]]; then
    echo "ERROR! Startup failed"
    exit 1
  fi
done

echo "Container $newId started"
echo "Waiting 10 secs and stopping old containers"
sleep 10

# stop and remove all coffee containers except new one
# (|| true: grep exits non-zero if there are no old containers)
containers=$(docker ps -q --filter=label=app=coffee | grep -v "$newId" || true)
if [[ -n "$containers" ]]; then
  docker stop $containers
  docker rm $containers &> /dev/null
fi

The Docker images are defined in server.env, or could alternatively be passed at script execution time:

COFFEE_IMAGE=sdaschner/quarkus-coffee:traefik-demo

Our run-coffee.sh script makes use of this environment variable:

#!/bin/bash
set -euo pipefail
cd "${0%/*}"

source server.env

docker run -d \
  --name coffee-$(date +%s) \
  [...] same as before
  "$COFFEE_IMAGE"

With these, we can start up our Traefik Proxy (./run-traefik.sh), our coffee backend (./run-coffee.sh), and after everything is up and running, update the image version in server.env to sdaschner/quarkus-coffee:traefik-demo-2 and invoke our rolling update script:

./rolling-update.sh
Starting new coffee container and waiting for startup ...
Container 797c368af374 started
Waiting 10 secs and stopping old containers
2e621845af47

In the meantime, you can still access your application:

curl https://coffee.example.com/ --resolve coffee.example.com:443:<IP> --insecure
Coffee
[repeat ...]
curl https://coffee.example.com/ --resolve coffee.example.com:443:<IP> --insecure
Coffee ☕!

To take this further, you can experiment with disabling the health check on the old container before it’s stopped, as well as with retries (can be annotated on your backend containers). As always, test your setup and update mechanism under some simulated load to see how it will behave.
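As a starting point for the retries, here is a sketch of the two additional labels that would attach Traefik's retry middleware to a router. The helper name retry_labels and the middleware name suffix -retry are my own choices for this example; the number of attempts is something to tune under load.

```shell
# Hypothetical helper: prints the labels that define a retry middleware
# and attach it to the given router.
#   $1 = router name, $2 = number of attempts
retry_labels() {
  local router=$1 attempts=$2
  # Middleware definition: retry a failed request up to <attempts> times
  echo "traefik.http.middlewares.${router}-retry.retry.attempts=${attempts}"
  # Attach the middleware to the router
  echo "traefik.http.routers.${router}.middlewares=${router}-retry"
}

# Possible usage, in addition to the labels in run-coffee.sh:
#   -l "$(retry_labels coffee 3 | sed -n 1p)" \
#   -l "$(retry_labels coffee 3 | sed -n 2p)" \
```

Whether retries are appropriate depends on the application, since a retried request may reach the backend more than once.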

Conclusion

For containerized workloads, Traefik Proxy does a great job as an easy-to-configure reverse proxy that detects backends based on other running Docker containers and their labels, which works well for simpler production environments. It comes in especially handy that Traefik doesn’t have to be restarted for the common reconfiguration operations.

More Information

To learn more, you can check out the following resources:

It also helps a lot to print the Traefik Proxy help, which lists all options that are available when starting the proxy (traefik --help), e.g.:

docker run --rm traefik:v2.9 --help | less

Happy proxying!
